Sora is OpenAI's text-to-video generation model, released earlier this month. A painbot is a concept I hatched a few days ago while talking to ChatGPT about AI empathy and the potential for AI to recognize, record, and react to human pain.

My initial thought was that the painbot could be trained on thousands of interactions between patients and their doctors discussing pain. It would recognize the trends that thread through these discussions and thereby become a quasi-expert in pain without ever having to experience it.

I imagined this painbot in an emergency room setting, replacing the process in which patients are asked to rate their pain on a scale of 1 to 10, or to pick one of five or six cartoon faces ranging from "rapturously happy" to "about to faint from the torture." Surely AI could conduct a more refined evaluation, freeing the frenzied medical staff for their more pressing responsibilities.

But this painbot could present a physical obstacle, because the last thing ER staff need is a robot obstructing their efforts to keep someone alive.

I realize how much the public distrusts AI, to the point that 60% say they wouldn't be comfortable with a doctor "relying" on AI to provide medical care. In another study, subjects felt "heard" by emotional support strategies, but the effect diminished when it was revealed that AI was what had "heard" them.

But what if we could get around that? In other words, what if a painbot could:

- Stay out of the way of ER staff

- Capture and record images of facial expressions and body postures that indicate pain or distress

- Focus attention on patients in the ER when staff can’t be available

- Evaluate pain as objectively as possible

- Complement medical staff while clearly operating with a specific purpose, rather than trying to take over anyone’s job

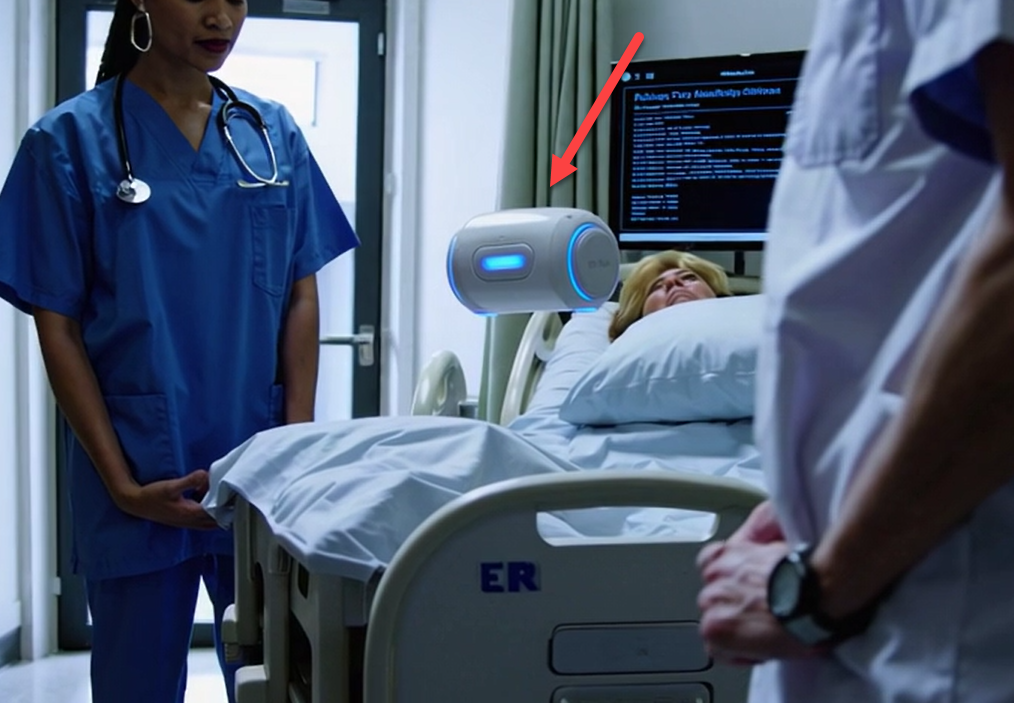

With those goals in mind, I ventured onto Sora.com with the aim of depicting such a bot in a video.

My first attempts, at best, depicted the painbot as a recording device for doctors.

A lot of young white women with straight brown hair stared impassively past the painbot. Most of them were rendered as medical staff themselves, no matter how much I emphasized that they were patients.

No matter how much I described a patient as being in pain, the most I could get from a woman was a furrowed eyebrow.

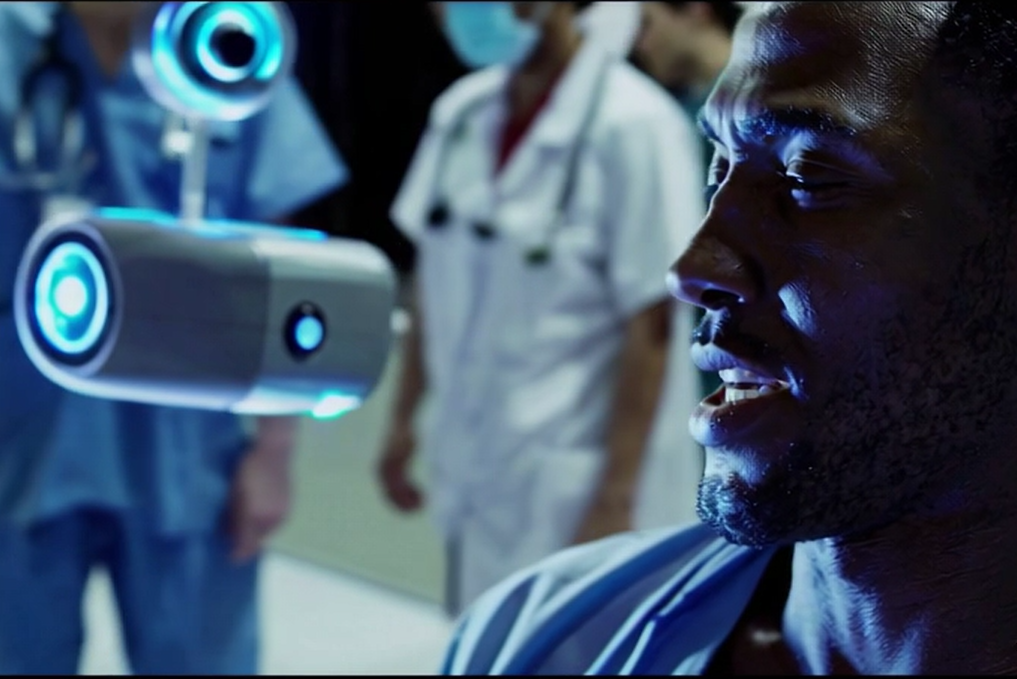

Once I made the patient male, I finally got pain expressed in an interview between the painbot and the patient. This is the clearest expression of pain that I got, and it's good. Unfortunately, the most the painbot would do was silently bear witness to the pain from the background.

This was the first and only time I got a Black patient. I have to admit, though, that the lighting is amazing.

This may speak to the capacity of AI to actually measure pain based on facial expression, but I don’t want to read too much into that.