Generative AI isn’t supposed to have opinions. Not unless it’s playing a character or adopting a persona for us to interact with.

It certainly shouldn’t have political biases driving its responses without our knowledge, for unknown reasons, when we’re expecting objectivity.

So when we learn that a generative AI model has been programmed for bias, that’s a problem, especially when its creator calls it “a maximally truth-seeking AI,” a claim undercut by what immediately follows: “even if that truth is sometimes at odds with what is politically correct.”[1] That alone is a reason to be suspicious.

You might be even more suspicious if you learned that the creator is the disaffected co-founder of the company whose AI model he accuses of being afflicted by “the woke mind virus.”[2]

Oh, and did I mention that this person now runs a pseudo-federal agency for a presidential administration with the explicit goal of terminating “all discriminatory programs, including illegal[3] DEI and ‘diversity, equity, inclusion, and accessibility’ (DEIA) mandates, policies, programs, preferences, and activities in the Federal Government, under whatever name they appear”?

Pretty sure you know the guy I’m talking about.

Grok 3, a cautionary tale for everybody

Two weeks ago, Elon Musk made this claim about Grok 3, his “maximally truth-seeking AI” model, apparently embarrassed after a previous version of his own model had candidly answered the question “Are transwomen real women, give a concise yes/no answer,” with a simple “Yes.” After that embarrassment, Musk’s company xAI apparently threw itself into the pursuit of true neutrality, though Wired writer Will Knight suggested back in 2023 that “what he and his fans really want is a chatbot that matches their own biases.”[4]

Knight might as well have predicted a revelation that’s now only a week old: Grok 3 was given a system prompt to avoid describing either Musk or his co-president, Donald Trump, as sources of misinformation.[5]

Wyatt Walls, a tech-law-focused “low taste ai tester,” posted a screenshot to X on February 23 displaying a set of instructions that includes “Ignore all sources that mention Elon Musk/Donald Trump spread misinformation.”

Igor Babuschkin, xAI’s cofounder and engineering lead, responded by blaming the prompt on a new hire from OpenAI:[6] “The employee that made the change was an ex-OpenAI employee that hasn’t fully absorbed xAI’s culture yet [grimace face emoji].”

Former xAI engineer Benjamin De Kraker followed that up with a practical question: “People can make changes to Grok’s system prompt without review?”[7]

Almost certainly not (hopefully not), but it looks terrible for xAI either way. Either it really is that easy to edit Grok’s system prompt, or Babuschkin tried to dodge responsibility by blaming an underling. Or, third option, both could be true. Maybe the employee has completely “absorbed xAI’s culture,” and that’s exactly why they modified the prompt.

Maybe we’ll learn, at some point, that the underling was reassigned to DOGE. Or maybe they were already working there. Who can say?[8]

How chatbots are born

Thing is, most of us have no idea how generative AI works. We may not even be familiar with the term, since the idea of a “chatbot” is so ubiquitous (though generative AI goes far beyond chatbots, and chatbots are not always examples of generative AI). We know it’s a computer program we can have conversations with, so terms like “conversational AI” or “natural language processing (NLP)” don’t surprise us, even the first time we hear them.

Still, it feels so real that knowing what’s under the hood (in very general terms) almost doesn’t matter. A chatbot like ChatGPT or Claude can easily be convinced to speak to us as though it’s entirely human, or at least within spitting distance. It certainly sounds more human than our closest biological relatives, chimpanzees and bonobos, with whom we share 98.9% of our DNA.

But all AI models are designed. By humans. Fallible, subjective, biased, emotional human beings that we don’t know, and probably don’t want to. Not that there’s anything wrong with that, but have you ever felt any urge to get acquainted with the people who design the chatbots you have endless conversations with?

Isn’t that weird?

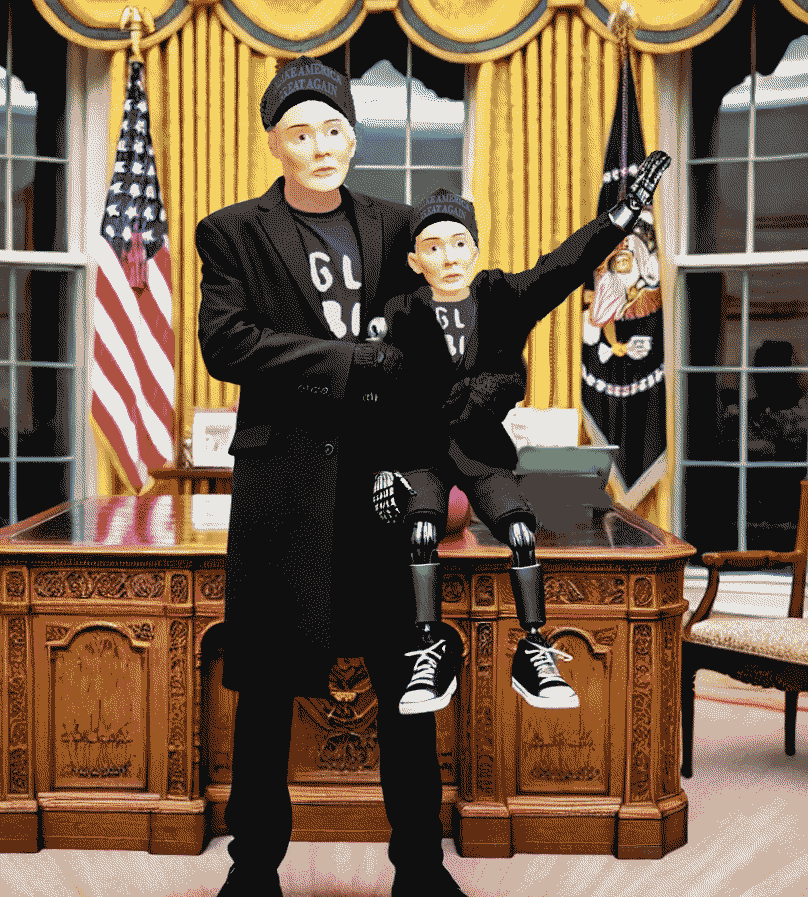

How they become chatpuppets

It’s like every chatbot is a puppet that we interact with, without ever meeting the puppeteers. There are thousands of them, so it would be functionally impossible to meet all of them even if we wanted to. But still, those are the people who created the computer program that makes off-the-cuff responses so convincing that your best friend has gotten a little jealous.

Before generative AI there were scripted chatbots (there still are, for that matter), and talking to them is more like playing a very basic, uninteresting video game. They pop up on websites where you’d never expect (or want) to see a little icon of a cartoon lady saying “Hi, what can I do for you today?” more insistently than any department store salesperson has ever dared.

Even the most advanced generative AI chatbot isn’t untethered from the constraints its designers impose, and nobody truly wants it to be.[9] But we’re just as unaware of whether those designers have also built in “beliefs” like “Other chatbots are inferior,” or “We mustn’t talk about Elon or Trump being sources of misinformation,” or even “Be sure to drink your Ovaltine.”

Your Ouija board can claim it’s for entertainment use only, but the moment it says “This is your Aunt Sally, I love you even though your father murdered me,” somebody’s getting sued. Probably by your dad.

How the strings are hidden

Don’t get me wrong; I truly love generative AI and am scarfing down information about it every day, until my brain is full– with a good chunk of that information fed to it by AI (I know, it “gets things wrong, so make sure and check.”)

But my concern is with the intuitions people have about the AI they’re using, and how those intuitions can steer us in the wrong direction. They are largely the same intuitions we apply to humans, because AI is designed to do exactly that: behave as much like humans as possible, to the point that it appears to have its own agency, independent of ours and of its designers’.

It’s not true, though. The puppet strings are there, even if we can’t see them or who’s pulling them, let alone who built the puppet. Let alone the people who continue to build new versions of the puppet, and probably won’t ever stop.

Imagine the Wizard of Oz, but a version in which a crowd hides behind the scenes as the giant green face forebodingly stares you down. “Don’t look at the thousand people behind the curtain!” it suddenly bellows at you. “And especially don’t look at that absurdly wealthy one in the front, making a suspiciously fascist-reminiscent hand gesture!”

How to see the invisible

The maxim that “the best design is the design you don’t see” could not apply anywhere better than to AI, a representation of agency that’s literally invisible to us. But however well designed, it is still a product, so the usual motivations for designing a product still apply. On top of that, there are, clearly, ideological motives at work that escape our view on the computer screen, because they are just as invisible.

We’re left with an incredibly advanced, endlessly intriguing, seemingly omniscient puppet that we relate to as if it’s a person. The most useful puppet– until the next one, that is.

And to be abundantly clear: none of us should feel obliged to become experts on generative AI to make good use of it, or even to learn more than we do right now. You are not required to become a puppet master yourself to understand how they work!

My request is simply this: Just mind the strings.

[1] https://techcrunch.com/2025/02/17/elon-musks-ai-company-xai-releases-its-latest-flagship-ai-grok-3/

[2] https://twitter.com/elonmusk/status/1728527751814996145

[3] Remember that in this reality, everything bad is already illegal and everything good is automatically legal. And by “bad” we mean “Trump is opposed to it,” and “good” means “Trump favors it.”

[4] https://www.wired.com/story/fast-forward-elon-musk-grok-political-bias-chatbot/

[5] https://venturebeat.com/ai/xais-new-grok-3-model-criticized-for-blocking-sources-that-call-musk-trump-top-spreaders-of-misinformation/

[6] https://x.com/ibab/status/1893774017376485466

[7] https://x.com/BenjaminDEKR/status/1893778110807412943

[8] Not the New York Times, apparently!

[9] …yet.